With the advent of cloud computing and better tooling, more organizations are moving to Software-as-a-Service (SaaS) models to simplify their delivery process, and this delivery model is becoming less complex to achieve. In this whitepaper, we walk through the key architectural elements of deploying a Clinical Data Repository (FHIR repository) in the cloud in a multi-tenant format. We look at how to isolate tenants within an EKS cluster, automate tenant onboarding, and route tenant workloads. While Blanc Labs used AWS EKS (Amazon Elastic Kubernetes Service) for this model, the same principles can be applied to any Kubernetes cluster.

The piece is intended for IT architects, developers, and DevOps engineers who have practical experience architecting in the AWS Cloud with EKS/Kubernetes.

A major Canadian healthtech company that focuses on remote patient monitoring using innovative technology was looking for the right partner to help it build a web-based SaaS application that could capture patient monitoring data via IoT devices and manual entries and store that data in a cloud-based clinical repository. Blanc Labs implemented this solution with the Smile Digital Health clinical data repository on HIPAA-compliant AWS cloud infrastructure.

Our solution, detailed below, increased the client's adaptability to interoperability standards and expanded its market reach and sales.

Challenges Around Switching to a FHIR-Compliant Clinical Repository

Installation

Installing a FHIR repository is not in itself a big challenge; in most cases, FHIR repository vendors provide detailed installation documentation. The complexity begins when your multi-tenancy needs require customization. Do you want to install one repository for all your tenants, or should each tenant have its own repository? Other important considerations are whether the vendor charges for repository installation and whether the FHIR repository supports multi-tenancy by default.

Multi-tenancy

Multi-tenancy brings another level of complexity to the overall architecture. How can you onboard new tenants quickly? Does your cloud provider support multi-tenancy? Will each tenant have its own cloud services, or can services be shared securely to reduce the overall cost of installation, operation, and maintenance?

Upgrade

Data repository vendors regularly release new versions, so it is important to keep your FHIR repository up to date. If you let it fall behind and decide to upgrade many years later, the upgrade can be challenging.

Scalability

Kubernetes solves some scalability issues, but replicating the infrastructure in a different geographical region remains the most challenging task. This matters in the healthcare domain because some countries have jurisdiction-specific requirements on where clinical data must be kept. In this scenario, we must replicate the same infrastructure in each required geographical location.

Building Multi-Tenancy Architecture

To systematically tackle the above-mentioned challenges, we can take a three-step approach:

- Tenant Isolation Model

- Routing traffic to tenants

- Onboarding new tenants through automation

Tenant Isolation Model

There are multiple ways to solve multi-tenancy issues, each with its own pros & cons.¹

In the world of AWS EKS (Kubernetes), a tenant-per-namespace model is a good blend of isolation and cost-efficiency. Tenant isolation can be achieved through Network Policies: you can configure a Network Policy to allow traffic coming from a specific namespace only and deny all others.
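As a rough illustration of the Network Policy approach described above, the following Python sketch builds a NetworkPolicy manifest that admits ingress to a tenant namespace only from an explicit list of namespaces. The function name, the policy name, and the example namespaces (`tenant-a`, `ingress`) are hypothetical. The sketch also assumes that the cluster's network plugin enforces Network Policies and that namespaces carry the `kubernetes.io/metadata.name` label that recent Kubernetes versions set automatically.

```python
import json
from typing import List


def tenant_network_policy(tenant_namespace: str, allowed_namespaces: List[str]) -> dict:
    """Build a NetworkPolicy that admits ingress only from the listed namespaces."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-listed-namespaces", "namespace": tenant_namespace},
        "spec": {
            # An empty podSelector selects every pod in the tenant namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Entries in "from" are OR-ed: traffic from any listed namespace is allowed.
                    "from": [
                        {"namespaceSelector": {"matchLabels": {"kubernetes.io/metadata.name": ns}}}
                        for ns in allowed_namespaces
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names: the tenant's own namespace plus the ingress controller's namespace.
    print(json.dumps(tenant_network_policy("tenant-a", ["tenant-a", "ingress"]), indent=2))
```

The printed JSON can be piped to `kubectl apply -f -`. Because the policy selects every pod in the namespace, ingress from any source that is not listed is denied.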

The question arises, how will you place your FHIR repository? You have two options here:

- Deploy individual FHIR repositories for each Tenant

- Share the FHIR repository among all Tenants

This decision will be based on which FHIR Repository you choose and the cost efficiency you are aiming for.

Individual FHIR Repository for each Tenant

This approach gives you complete data isolation but comes with high operational costs. On AWS, if you go with RDS (Amazon Relational Database Service), you end up with a separate DB instance for each FHIR repository. Along with database and cloud service costs, there are other things to consider when choosing this approach. For example, how easy is it to install the FHIR repository through automation scripts? How much time will each installation take? Remember, our goal is to onboard a new tenant quickly and automatically.

Shared FHIR Repository

Some clinical data repositories do provide multi-tenancy support but it’s important to look at it from an end-to-end context: how data will flow into the clinical repository, implications for data security, and other wrapper services or systems that will interact with clinical data.

If your FHIR repository supports multi-tenancy, you can deploy it in a shared namespace, and tenant-specific services (wrapper services) interact with it directly. In this approach, all traffic must come from tenant wrapper services only; no direct external traffic is allowed to reach the shared repository.
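One way to express the "wrapper services only" rule is a Network Policy on the shared namespace. The sketch below is an assumption-laden illustration, not the configuration used in the project: the namespace name `fhir-shared` and the labels `app=fhir-repository`, `tenant=true`, and `role=wrapper` are all hypothetical.

```python
import json
from typing import Dict


def shared_repository_policy(
    shared_namespace: str,
    repo_labels: Dict[str, str],
    tenant_ns_labels: Dict[str, str],
    wrapper_pod_labels: Dict[str, str],
) -> dict:
    """Allow ingress to the repository pods only from wrapper pods in tenant namespaces."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-tenant-wrappers-only", "namespace": shared_namespace},
        "spec": {
            # Apply the policy to the repository pods only.
            "podSelector": {"matchLabels": repo_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [
                        {
                            # Both selectors sit in ONE entry, so they are AND-ed:
                            # the source must be a wrapper pod AND live in a tenant namespace.
                            "namespaceSelector": {"matchLabels": tenant_ns_labels},
                            "podSelector": {"matchLabels": wrapper_pod_labels},
                        }
                    ]
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = shared_repository_policy(
        "fhir-shared",                  # hypothetical shared namespace
        {"app": "fhir-repository"},     # hypothetical repository pod label
        {"tenant": "true"},             # hypothetical label on tenant namespaces
        {"role": "wrapper"},            # hypothetical label on wrapper pods
    )
    print(json.dumps(policy, indent=2))
```

Placing the two selectors in separate `from` entries would instead allow any pod in a tenant namespace or any wrapper-labelled pod in the shared namespace, which is looser than the rule described above.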

The benefit of this approach is that when you bring in a new Tenant, you don’t need to install the FHIR repository from scratch. All you need is to deploy wrapper services for the new Tenant, which is comparatively easy using automation scripts.

Tenant isolation is not the only problem to solve in this architecture. How to route traffic to tenants is another important question.

Routing Traffic to Tenants

The architecture diagram above illustrates how to route internet traffic to your pods. There are two approaches for routing traffic:

- Using the Traefik ingress controller (depicted in the above diagram)

- Using the AWS Load Balancer Controller

Routing Traffic Using Traefik Controller

Traefik is a cloud-native, scalable ingress proxy. It can serve as a single point of entry to the cluster, forwarding traffic to the corresponding backends. Here we can split traffic based on subdomains, paths, and headers, and apply “middlewares” to transform incoming requests.
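To make subdomain-based splitting concrete, here is a minimal sketch that builds a Traefik `IngressRoute` manifest routing `<tenant>.<base domain>` to a service in the tenant's namespace. The domain `example.com`, the service name `wrapper-api`, the middleware name, and the `websecure` entry point are assumptions for illustration. The `apiVersion` shown is the one used by recent Traefik releases; older installations may use a different API group.

```python
import json
from typing import Sequence


def tenant_ingress_route(
    tenant: str,
    base_domain: str,
    service: str,
    port: int,
    middlewares: Sequence[str] = (),
) -> dict:
    """Build an IngressRoute that sends <tenant>.<base_domain> to the tenant's service."""
    route = {
        "kind": "Rule",
        # Traefik matcher syntax: route on the request's Host header.
        "match": f"Host(`{tenant}.{base_domain}`)",
        "services": [{"name": service, "port": port}],
    }
    if middlewares:
        # Middlewares are referenced by name and must exist as Middleware resources.
        route["middlewares"] = [{"name": name} for name in middlewares]
    return {
        "apiVersion": "traefik.io/v1alpha1",
        "kind": "IngressRoute",
        # Tenant-per-namespace: the route lives next to the service it targets.
        "metadata": {"name": f"{tenant}-route", "namespace": tenant},
        "spec": {"entryPoints": ["websecure"], "routes": [route]},
    }


if __name__ == "__main__":
    manifest = tenant_ingress_route(
        "tenant-a", "example.com", "wrapper-api", 8080, middlewares=["strip-prefix"]
    )
    print(json.dumps(manifest, indent=2))
```

Path- or header-based splitting follows the same shape; only the `match` expression changes.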

Routing Traffic Using AWS Load Balancer on EKS

The AWS Load Balancer Controller is also an ingress controller. Once deployed on your EKS cluster, it creates the corresponding AWS resources, such as an Application Load Balancer, for the Ingress resources you define.²
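For comparison, a tenant route handled by the AWS Load Balancer Controller is an ordinary Kubernetes Ingress plus controller-specific annotations. The sketch below is illustrative only: the host, service name, and group name are hypothetical, and it assumes the controller is installed with an ingress class named `alb`.

```python
import json


def tenant_alb_ingress(tenant: str, host: str, service: str, port: int, group: str = "tenants") -> dict:
    """Build an Ingress that the AWS Load Balancer Controller turns into ALB rules."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{tenant}-ingress",
            "namespace": tenant,
            "annotations": {
                "alb.ingress.kubernetes.io/scheme": "internet-facing",
                # "ip" targets pod IPs directly instead of node ports.
                "alb.ingress.kubernetes.io/target-type": "ip",
                # Ingresses that share a group name are merged onto one ALB,
                # which avoids paying for a separate load balancer per tenant.
                "alb.ingress.kubernetes.io/group.name": group,
            },
        },
        "spec": {
            "ingressClassName": "alb",
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {"service": {"name": service, "port": {"number": port}}},
                            }
                        ]
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(tenant_alb_ingress("tenant-a", "tenant-a.example.com", "wrapper-api", 8080), indent=2))
```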

Onboarding New Tenants Through Automation

To onboard a new tenant successfully, you need a number of configurations and resources up and running in the cloud and on your EKS cluster. Creating them manually takes a lot of time, and it becomes nearly impossible if your sales team brings new tenants to the platform daily. It also adds risk to the production system, because you would be changing configurations by hand every day.

Hence, without automation, the effort of building the entire SaaS platform is lost.

Automation must be done in two layers:

- Infrastructure

- EKS/Kubernetes (onboarding new tenants)

Infrastructure

The infrastructure-as-code concept is a lifesaver in this layer of automation. All large cloud providers support popular third-party platforms like Terraform, Ansible, Chef, etc., to manage automated infrastructure. You can also achieve the same flexibility using native cloud solutions like AWS CloudFormation or Azure Resource Manager.

Once you have scripts ready, you can easily replicate the infrastructure in different geographical locations whenever it is required. This layer helps you to achieve some degree of scalability.

In this layer, you create cloud resources like VPCs, subnets, security groups, and EKS, along with supporting resources like S3 (storage), RDS, etc. You also need to set up some base resources on your EKS cluster, such as Istio, networking, logging, and monitoring resources.
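Replicating the same infrastructure across regions usually comes down to feeding one set of scripts different inputs. As a sketch under stated assumptions, the snippet below generates one Terraform variable set per region, each with a non-overlapping VPC CIDR. The variable names (`aws_region`, `cluster_name`, `vpc_cidr`) and the `10.0.0.0/8` address plan are hypothetical and would need to match whatever your Terraform modules declare.

```python
import ipaddress
import json
from typing import Dict, List


def region_tfvars(regions: List[str], supernet: str = "10.0.0.0/8", vpc_prefix: int = 16) -> Dict[str, dict]:
    """Return one variable set per region, each with its own VPC CIDR block."""
    # Carve the supernet into equally sized, non-overlapping VPC ranges.
    blocks = ipaddress.ip_network(supernet).subnets(new_prefix=vpc_prefix)
    return {
        region: {
            "aws_region": region,
            "cluster_name": f"fhir-{region}",
            "vpc_cidr": str(cidr),
        }
        for region, cidr in zip(regions, blocks)
    }


if __name__ == "__main__":
    for region, variables in region_tfvars(["ca-central-1", "eu-west-1"]).items():
        # Each block could be saved as <region>.tfvars.json and passed via -var-file.
        print(f"# {region}.tfvars.json")
        print(json.dumps(variables, indent=2))
```

Keeping the CIDR ranges distinct is a design choice that leaves room for peering regions later; it is not required for isolated deployments.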

EKS / Kubernetes (Onboarding New Tenants)

A frictionless process for onboarding new tenants is the key component of a multi-tenancy offering. If onboarding a tenant takes a long time, the purpose of the SaaS offering is defeated.

This process is a composite of multiple moving parts. Orchestration of these moving parts is required to get the Tenant up and running quickly. Through automation, we can achieve a low-friction process.
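The orchestration idea can be sketched as an ordered list of steps that stops at the first failure and reports how far it got, so a rerun knows where to resume. This is a simplified illustration rather than the scripts used in the project; the step names and the `onboard_tenant` function are hypothetical, and real steps would call Terraform, the AWS CLI, or `kubectl` instead of printing.

```python
from typing import Callable, List, Tuple

# A step is a (name, action) pair; the action receives the tenant identifier.
Step = Tuple[str, Callable[[str], None]]


def onboard_tenant(tenant: str, steps: List[Step]) -> List[str]:
    """Run each step in order; stop at the first failure and report progress."""
    completed: List[str] = []
    for name, action in steps:
        try:
            action(tenant)
        except Exception as exc:
            raise RuntimeError(
                f"onboarding {tenant!r} failed at step {name!r}; completed steps: {completed}"
            ) from exc
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Placeholder actions standing in for real provisioning commands.
    demo_steps: List[Step] = [
        ("create-namespace", lambda t: print(f"[{t}] namespace created")),
        ("configure-role-and-policies", lambda t: print(f"[{t}] role and policies configured")),
        ("deploy-wrapper-services", lambda t: print(f"[{t}] wrapper services deployed")),
    ]
    print(onboard_tenant("tenant-a", demo_steps))
```

Writing each step so that it is safe to run twice keeps a partially failed onboarding recoverable by simply running the whole sequence again.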

We can automate multiple subprocesses, such as creating new namespaces and configuring the tenant role and policies, using Terraform, the AWS CLI, and Bash scripts. Each tenant must access only those resources to which it is entitled; Cognito user pools, S3 buckets, SQS queues, DB schemas, and the FHIR repository are a few examples.
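As one example of scoping a tenant to its own resources, the sketch below generates an IAM policy document that limits a tenant role to its own prefix in an S3 bucket. It assumes a shared bucket with one prefix per tenant, which is only one possible layout (a bucket per tenant is another); the bucket name and function name are hypothetical.

```python
import json


def tenant_s3_policy(bucket: str, tenant: str) -> dict:
    """Build an IAM policy limiting a tenant role to its own prefix in a bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOwnPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is a bucket-level action, so it is narrowed with a prefix condition.
                "Condition": {"StringLike": {"s3:prefix": [f"{tenant}/*"]}},
            },
            {
                "Sid": "ReadWriteOwnObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                # Object-level actions are narrowed through the resource ARN itself.
                "Resource": f"arn:aws:s3:::{bucket}/{tenant}/*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and tenant names.
    print(json.dumps(tenant_s3_policy("clinical-documents", "tenant-a"), indent=2))
```

The same pattern, a template filled in per tenant, applies to the other resource types listed above, such as queues and database schemas.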

Also, along with creating the above resources, scripts have to deploy wrapper & other supporting services if required.

These automation scripts give you the flexibility to build a Tenant Management app where the Tenant or Sales Team can onboard a new Tenant at the click of a button.

Key Learnings and Conclusion

At Blanc Labs, we successfully architected and delivered a multi-tenant SaaS solution for our healthcare client using the design described in this whitepaper.

The biggest challenge we encountered was developing the automation scripts, whose complexity can increase considerably if they are not designed well. We modularized and documented the scripts so that they are easy to maintain and enhance. The automation scripts can also be configured in a client’s CI/CD pipelines, allowing clients to deploy new tenants and create secure, highly available infrastructure at the click of a button.

Latest Insights

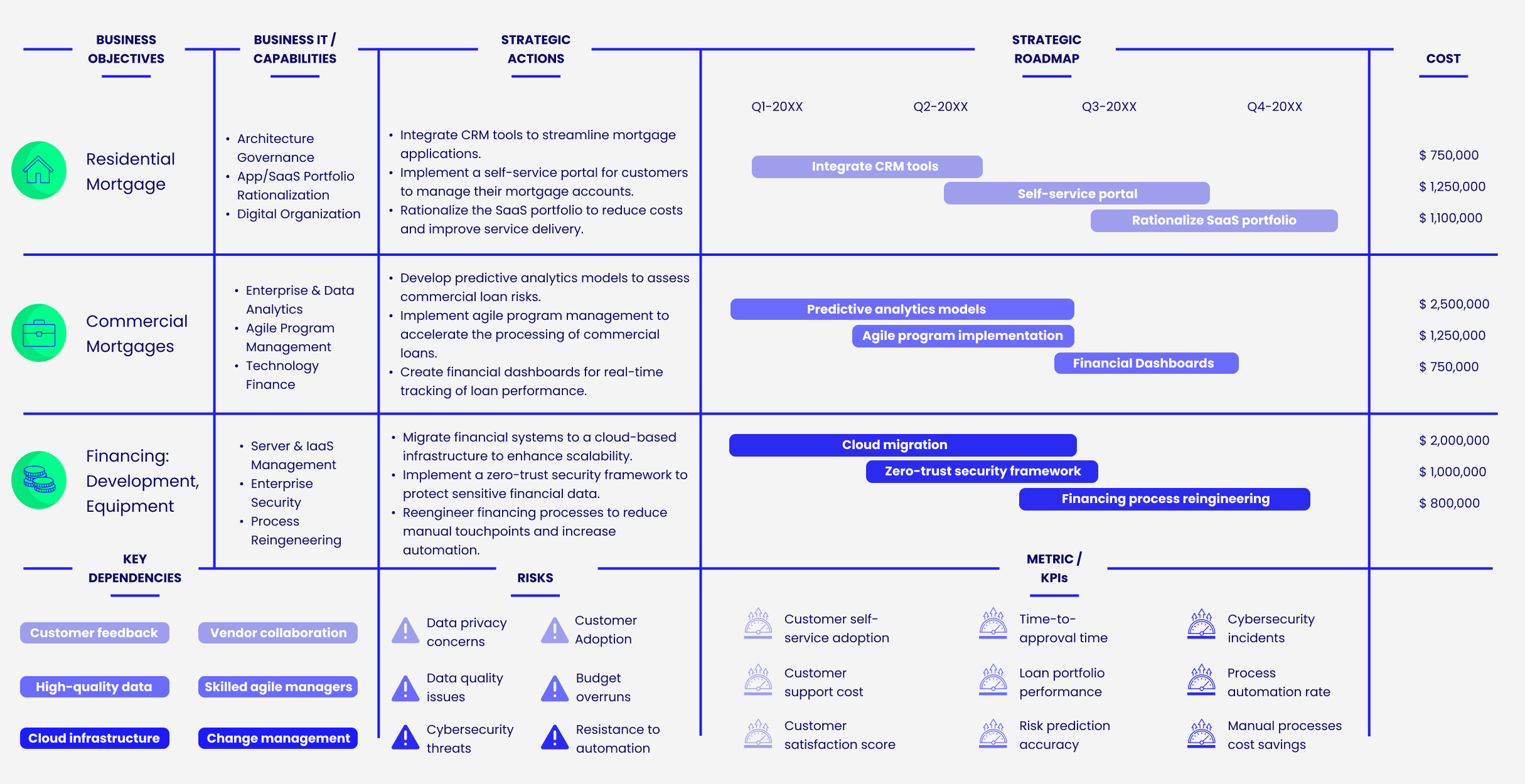

Lenders Transformation Playbook: Bridging Strategy and Execution

AI’s Mid-Market Makeover in Financial Services

Mid-sized financial services institutions (FIs) are facing significant challenges during this period of rapid technological change, particularly with the rise of artificial intelligence (AI). As customer expectations grow, smaller banks and lenders must stay competitive and responsive. Canada’s largest financial institutions are already advancing in AI, while many others remain in ‘observer’ mode, hesitant to invest and experiment. Yet, mid-sized FIs that adopt the right strategy have unique agility, allowing them to adapt swiftly and efficiently to technological disruptions—even more so than their larger counterparts.

Process Improvement and Automation Support the Mission at Trez Capital 🚀

Trez distributes capital based on very specific criteria. But with over 300 investments in their portfolio, they process numerous payment requests and deal with documents in varied data formats. They saw an opportunity to enhance efficiency, improve task management, and better utilize data insights for strategic decision-making.

Align, Assemble, Assure: A Framework for AI Adoption

An in-depth guide for adopting and scaling AI in the enterprise using actionable and measurable steps.

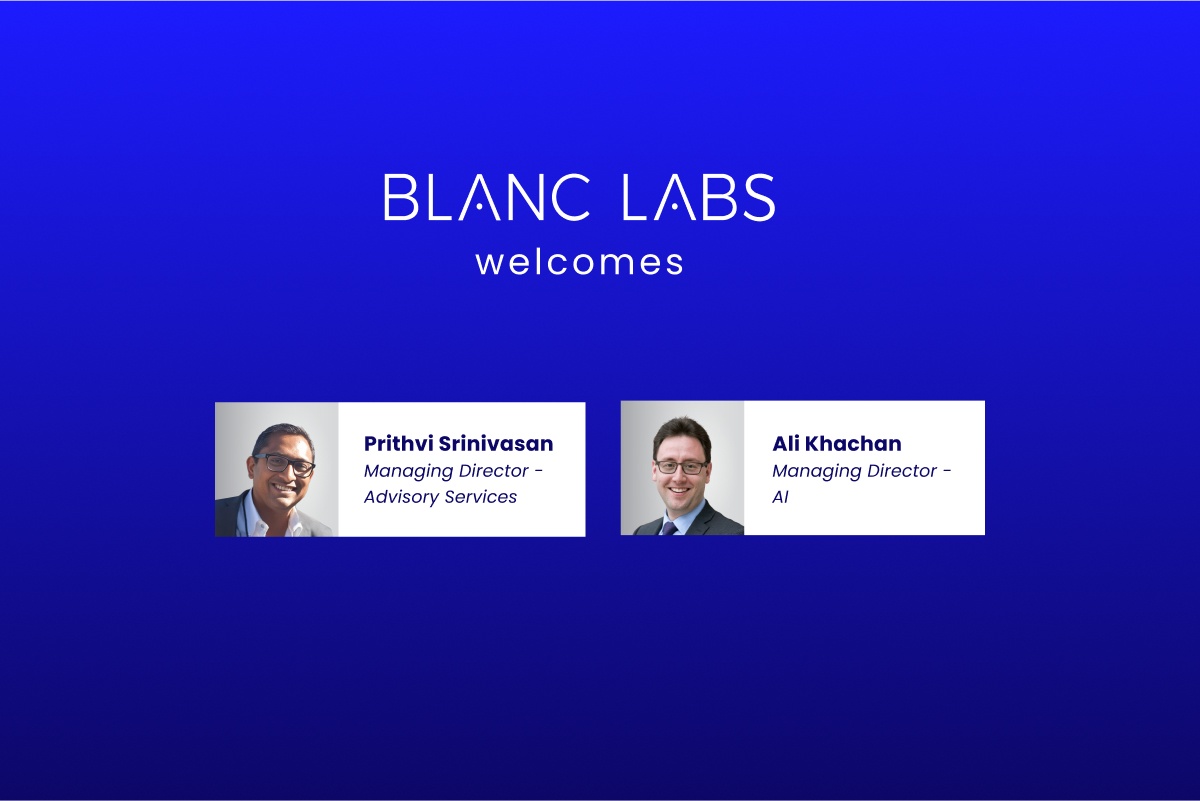

Blanc Labs Welcomes Two New Leaders to Advance AI Innovation and Enhance Tech Advisory Services for Financial Institutions Across North America

Blanc Labs and TCG Process have partnered to transform lending operations with innovative automation solutions, using the DocProStar platform to enhance efficiency, compliance, and customer satisfaction in the Canadian lending market.

Blanc Labs Partners with TCG Process to Integrate their Automation and Orchestration Platform and deliver Advanced Intelligent Workflow Automation to Financial Institutions

Blanc Labs and TCG Process have partnered to transform lending operations with innovative automation solutions, using the DocProStar platform to enhance efficiency, compliance, and customer satisfaction in the Canadian lending market.

BPI in Banking and Financial Services in the US & Canada

Banking and financial services are changing fast. Moving from old, paper methods to new, digital ones is key to staying in business. It’s important to think about how business process improvement (BPI) can help.

Business Process Improvement vs Business Process Reengineering

Business process improvement vs. reengineering is a tough choice. In this guide, we help you choose between the two based on four factors.

What is the role of a Business Process Improvement Specialist?

A business process improvement specialist identifies bottlenecks and inefficiencies in your workflows, allowing you to focus efforts on automating the right processes.

Open Banking Technology Architecture Whitepaper

We’ve developed this resource to help technical teams adopt an Open Banking approach by explaining a high-level solution architecture that is organization agnostic.

Winning the Open Banking Race: A Challenger’s Path to Entering the Ecosystem Economy

Learn about the steps you can take in forming an open banking strategy and executing on it.

Canadian IT services firms offer a strategic opportunity for US Banks and FIs

Discover the strategic advantage for U.S. banks embracing innovation with cost-effective Canadian nearshore IT support.

These are not your grandmother’s models: the impact of LLM’s on Document Processing

Explore the transformative influence of large language models (LLMs) on document processing in this insightful article. Discover how these cutting-edge models are reshaping traditional approaches, unlocking new possibilities in data analysis, and revolutionizing the way we interact with information.

From Chaos to Clarity: Achieving Operational Excellence through Business Process Improvement

Discover transformative insights and strategies to streamline operations, enhance efficiency, and drive success.

Digital Transformation Vs. IT Modernization: What’s the Difference?

Healthcare Interoperability: Challenges and Benefits

Navigating the Healthcare Interoperability Journey

Finding the right API Management Platform

Lenders Transformation Playbook: Bridging Strategy and Execution

AI’s Mid-Market Makeover in Financial Services

Mid-sized financial services institutions (FIs) are facing significant challenges during this period of rapid technological change, particularly with the rise of artificial intelligence (AI). As customer expectations grow, smaller banks and lenders must stay competitive and responsive. Canada’s largest financial institutions are already advancing in AI, while many others remain in ‘observer’ mode, hesitant to invest and experiment. Yet, mid-sized FIs that adopt the right strategy have unique agility, allowing them to adapt swiftly and efficiently to technological disruptions—even more so than their larger counterparts.

Process Improvement and Automation Support the Mission at Trez Capital 🚀

Trez distributes capital based on very specific criteria. But with over 300 investments in their portfolio, they process numerous payment requests and deal with documents in varied data formats. They saw an opportunity to enhance efficiency, improve task management, and better utilize data insights for strategic decision-making.

Align, Assemble, Assure: A Framework for AI Adoption

An in-depth guide for adopting and scaling AI in the enterprise using actionable and measurable steps.

Blanc Labs Welcomes Two New Leaders to Advance AI Innovation and Enhance Tech Advisory Services for Financial Institutions Across North America

Blanc Labs and TCG Process have partnered to transform lending operations with innovative automation solutions, using the DocProStar platform to enhance efficiency, compliance, and customer satisfaction in the Canadian lending market.

Blanc Labs Partners with TCG Process to Integrate their Automation and Orchestration Platform and deliver Advanced Intelligent Workflow Automation to Financial Institutions

Blanc Labs and TCG Process have partnered to transform lending operations with innovative automation solutions, using the DocProStar platform to enhance efficiency, compliance, and customer satisfaction in the Canadian lending market.

BPI in Banking and Financial Services in the US & Canada

Banking and financial services are changing fast. Moving from old, paper methods to new, digital ones is key to staying in business. It’s important to think about how business process improvement (BPI) can help.

Business Process Improvement vs Business Process Reengineering

Business process improvement vs. reengineering is a tough choice. In this guide, we help you choose between the two based on four factors.

What is the role of a Business Process Improvement Specialist?

A business process improvement specialist identifies bottlenecks and inefficiencies in your workflows, allowing you to focus efforts on automating the right processes.

Open Banking Technology Architecture Whitepaper

We’ve developed this resource to help technical teams adopt an Open Banking approach by explaining a high-level solution architecture that is organization agnostic.

Winning the Open Banking Race: A Challenger’s Path to Entering the Ecosystem Economy

Learn about the steps you can take in forming an open banking strategy and executing on it.

Canadian IT services firms offer a strategic opportunity for US Banks and FIs

Discover the strategic advantage for U.S. banks embracing innovation with cost-effective Canadian nearshore IT support.

These are not your grandmother’s models: the impact of LLM’s on Document Processing

Explore the transformative influence of large language models (LLMs) on document processing in this insightful article. Discover how these cutting-edge models are reshaping traditional approaches, unlocking new possibilities in data analysis, and revolutionizing the way we interact with information.

From Chaos to Clarity: Achieving Operational Excellence through Business Process Improvement

Discover transformative insights and strategies to streamline operations, enhance efficiency, and drive success.

Digital Transformation Vs. IT Modernization: What’s the Difference?

Healthcare Interoperability: Challenges and Benefits

Navigating the Healthcare Interoperability Journey

Finding the right API Management Platform

Lenders Transformation Playbook: Bridging Strategy and Execution

AI’s Mid-Market Makeover in Financial Services

Mid-sized financial services institutions (FIs) are facing significant challenges during this period of rapid technological change, particularly with the rise of artificial intelligence (AI). As customer expectations grow, smaller banks and lenders must stay competitive and responsive. Canada’s largest financial institutions are already advancing in AI, while many others remain in ‘observer’ mode, hesitant to invest and experiment. Yet, mid-sized FIs that adopt the right strategy have unique agility, allowing them to adapt swiftly and efficiently to technological disruptions—even more so than their larger counterparts.

Process Improvement and Automation Support the Mission at Trez Capital 🚀

Trez distributes capital based on very specific criteria. But with over 300 investments in their portfolio, they process numerous payment requests and deal with documents in varied data formats. They saw an opportunity to enhance efficiency, improve task management, and better utilize data insights for strategic decision-making.

Align, Assemble, Assure: A Framework for AI Adoption

An in-depth guide for adopting and scaling AI in the enterprise using actionable and measurable steps.

Blanc Labs Welcomes Two New Leaders to Advance AI Innovation and Enhance Tech Advisory Services for Financial Institutions Across North America

Blanc Labs and TCG Process have partnered to transform lending operations with innovative automation solutions, using the DocProStar platform to enhance efficiency, compliance, and customer satisfaction in the Canadian lending market.

Blanc Labs Partners with TCG Process to Integrate their Automation and Orchestration Platform and deliver Advanced Intelligent Workflow Automation to Financial Institutions

Blanc Labs and TCG Process have partnered to transform lending operations with innovative automation solutions, using the DocProStar platform to enhance efficiency, compliance, and customer satisfaction in the Canadian lending market.

BPI in Banking and Financial Services in the US & Canada

Banking and financial services are changing fast. Moving from old, paper methods to new, digital ones is key to staying in business. It’s important to think about how business process improvement (BPI) can help.

Business Process Improvement vs Business Process Reengineering

Business process improvement vs. reengineering is a tough choice. In this guide, we help you choose between the two based on four factors.

What is the role of a Business Process Improvement Specialist?

A business process improvement specialist identifies bottlenecks and inefficiencies in your workflows, allowing you to focus efforts on automating the right processes.

Open Banking Technology Architecture Whitepaper

We’ve developed this resource to help technical teams adopt an Open Banking approach by explaining a high-level solution architecture that is organization agnostic.

Winning the Open Banking Race: A Challenger’s Path to Entering the Ecosystem Economy

Learn about the steps you can take in forming an open banking strategy and executing on it.

Canadian IT services firms offer a strategic opportunity for US Banks and FIs

Discover the strategic advantage for U.S. banks embracing innovation with cost-effective Canadian nearshore IT support.

These are not your grandmother’s models: the impact of LLM’s on Document Processing

Explore the transformative influence of large language models (LLMs) on document processing in this insightful article. Discover how these cutting-edge models are reshaping traditional approaches, unlocking new possibilities in data analysis, and revolutionizing the way we interact with information.

From Chaos to Clarity: Achieving Operational Excellence through Business Process Improvement

Discover transformative insights and strategies to streamline operations, enhance efficiency, and drive success.